#however when it is subject to the constraints of its purpose as a machine


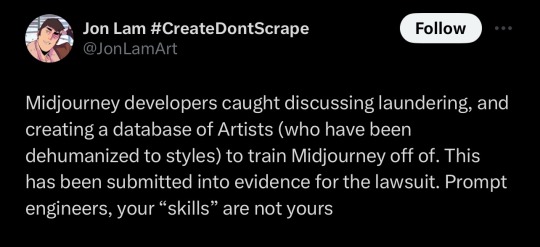

I think this part is truly the most damning:

If it's all pre-rendered mush and it's "too expensive to fully experiment or explore" then such AI is not a valid artistic medium. It's entirely deterministic, like a pseudorandom number generator. The goal here is optimizing the rapid generation of an enormous quantity of low-quality images which fulfill the expectations put forth by The Prompt.

It's the modern technological equivalent of a circus automaton "painting" a canvas to be sold in the gift shop.

so a huge list of artists that was used to train midjourney’s model got leaked and i’m on it

literally there is no reason to support AI generators, they can’t ethically exist. my art has been used to train every single major one without consent lmfao 🤪

link to the archive

#to be clear AI as a concept has the power to create some truly fantastic images#however when it is subject to the constraints of its purpose as a machine#it is only capable of performing as its puppeteer wills it#and these puppeteers have the intention of stealing#tech#technology#tech regulation#big tech#data harvesting#data#technological developments#artificial intelligence#ai#machine generated content#machine learning#intellectual property#copyright

37K notes


TAFAKKUR: Part 330

OLFACTION: SENSING THE SCENTS: Part 2

E-NOSE TECHNOLOGIES

The sensor is the e-nose's key element, and the sensor type is its defining characteristic. There are 5 types of e-nose sensors, as follows:

Optical sensors: Optical fiber sensors work through fluorescence and chemiluminescence. The glass fibers in the tube carry a thin coating of chemically active material on their sides and at both ends. As VOCs (volatile organic compounds) interact with the chemical dyes in the organic matrix, the dyes' fluorescent emission spectrum changes. These changes are then measured and recorded for different odorous particles.

Fiber arrays with different dye mixtures can be used as sensors. These are fabricated by dip coating (binding a plastic solution to a substrate), micro-electromechanical systems (MEMS) techniques, and precision machining. The main advantage is that this adjustable tool can filter out noise. Also, since many dyes are available from biological research, the sensors are cheap and easy to fabricate. But the instrumentation and control systems are complex, which adds to the cost, and the sensors have a limited lifetime due to photobleaching (the sensing process slowly consumes the fluorescent dyes).

Optical sensors are sensitive and can measure down to low ppb (parts per billion) levels; however, they are still in the research stage of development.

Spectrometry-based Sensors: This group consists of a molecular spectrum-based gas chromatography (GC), an atomic mass spectrum-based mass spectrometry (MS), and a transmitted light spectrum-based light spectrum (LS). The first two can analyze the odor's components accurately, which is a plus. However, their use of a vapor trap to increase concentration can alter the odor's characteristics. LS devices do not consume the sample, but do require tunable quantum-well devices. GC and MS devices are commercially available, while LS devices are only at the research stage. All spectrometry-based sensors are fabricated by MEMS and precision machining, and can measure odors to a low ppb level.

The GC column separates the odorant into its molecular constituents, and MS forms a mass spectrum for each peak. The spectra are then compared to a large precompiled database of spectral peaks to classify and identify odorants.

MOSFET (metal-oxide-semiconductor field-effect transistor): The basic principle here is capacitive charge coupling. In other words, VOCs react with the catalytic metal and thereby alter the device's electrical properties. The device's selectivity and sensitivity can be fine-tuned by varying the metal catalyst's thickness and composition. MOSFETs are micro-fabricated and commercially available, but can measure only at the parts-per-million level. They can be manufactured together with their electronic interface circuits, which minimizes batch-to-batch variation. However, the gas produced by the VOC-metal reaction must penetrate the MOSFET's gate.

Conductivity Sensors: The sensor types used here are metal oxide or conducting polymer. Both operate on the principle of conductivity, for their resistance changes as they interact with VOCs. Metal oxide sensors are common, commercially available, inexpensive, and easy to produce (they are micro-fabricated). Their sensitivity ranges from 5-500 ppm. However, they only operate at high temperatures (200°C to 400°C).

In conducting polymer sensors, VOCs bond with the polymer backbone and change the polymer's conductivity (resistance). They are micro-fabricated using electroplating and screen printing, are commercially available, and can measure from 0.1 to 100 ppm. They operate at room temperature, yet are very sensitive to humidity. Moreover, it is hard to electropolymerize the active material, which makes batch-to-batch variation inevitable. Sometimes VOCs penetrate the polymer chain, which means that the sensor must be returned to its neutral reference state, a very time-consuming process.

Piezoelectric Sensors: These devices, which measure any change in mass, come in two varieties: quartz crystal microbalance (QCM) and surface acoustic wave (SAW) devices.

QCM sensors have a resonating disk with metal electrodes on each side. When the gas sample is applied to the resonator's surface, the polymer coating absorbs VOCs from the environment. Its mass therefore increases, which lowers the resonance frequency. As the U.S. Navy has long used QCMs, this technology is familiar, developed, and commercially available. A QCM sensor is fabricated by screen-printing, wire bonding, and MEMS. Although it can measure a 1.0 ng mass change, its MEMS fabrication and interface electronics are major disadvantages. QCM sensors respond quite linearly to mass changes, their sensitivity to temperature can be adjusted, and their response to water varies with the material used.
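The article does not give a formula for the mass-to-frequency relationship. One standard way to express it, assuming a thin, rigid coating, is the Sauerbrey relation. The symbols below are not from the original text: $f_0$ is the unloaded resonance frequency, $\Delta m$ the added mass, $A$ the active electrode area, and $\rho_q$ and $\mu_q$ the density and shear modulus of quartz.

$$
\Delta f = -\frac{2 f_0^{2}}{A\sqrt{\rho_q \mu_q}}\,\Delta m
$$

The minus sign means that added mass lowers the resonance frequency, and the shift is proportional to $\Delta m$, which is the sense in which a QCM is linear in mass changes.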

MEMS techniques should be handled carefully, for the surface-to-volume ratio increases drastically as dimensions approach the micrometer level. Measurement accuracy is lost when the increasing surface-to-volume ratio begins to degrade the signal-to-noise ratio. This problem occurs in most micro-fabricated devices. SAW devices operate at much higher frequencies. Since 3-D MEMS processing is unnecessary, SAW devices are cheaper. As with QCM devices, many polymer coatings are available. Differential devices can be quite sensitive. However, both QCM and SAW sensors require more complex interface electronics than conductivity sensors do. Also, as the active membrane ages, resonance frequencies can drift, so the frequency must be tracked over time. SAW devices are commercially available and sensitive to mass changes at the 1.0 pg level.

PATTERN RECOGNITION

Any e-nose's primary task is to identify an odorant and perhaps measure its concentration. After the signal processing step comes the crucial step of pattern recognition: preprocessing, feature extraction, classification, and decision-making. A database of odors must be formed for comparison purposes.

Preprocessing accounts for sensor drifts and reduces sample-to-sample variation. This can be done by normalizing sensor response ranges, manipulating sensor baselines, and compressing sensor transients.
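As a rough illustration of two of these steps, the sketch below applies a baseline correction (fractional change relative to each sensor's baseline) and then normalizes the array response to unit length. The function names and the sensor readings are made up for the example; the article does not prescribe a particular formula.

```python
def baseline_correct(response, baseline):
    """Fractional change of each sensor relative to its own baseline."""
    return [(r - b) / b for r, b in zip(response, baseline)]


def normalize_vector(values):
    """Scale the array response to unit Euclidean length.

    This removes much of the dependence on overall signal strength,
    so that the pattern across sensors is what gets compared.
    """
    norm = sum(v * v for v in values) ** 0.5
    if norm == 0:
        return list(values)
    return [v / norm for v in values]


# Hypothetical readings from a three-sensor array (arbitrary units).
baseline = [100.0, 200.0, 50.0]   # response in clean reference air
sample = [110.0, 260.0, 45.0]     # response while exposed to the odor

relative = baseline_correct(sample, baseline)
unit = normalize_vector(relative)
print(relative)
print(unit)
```

Dividing by the baseline is only one possible choice; subtracting it is another common one, and which works better depends on the sensor type.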

Feature extraction involves dimensionality reduction, a crucial step for statistical data analysis, since the number of examples in the database is usually limited by cost. The high dimensionality caused by sensor arrays is reduced so that only the information relevant to pattern recognition is kept. As most dimensions are correlated and dependent, it is better to reduce dimensionality to a few informative axes.

Feature extraction usually is accomplished by classical principal component analysis (PCA) or linear discriminant analysis (LDA). PCA is a linear transformation that finds the maximum-variance projections and is the most widely used technique for feature extraction. But as PCA ignores class labels, it is not an optimal technique for odor recognition.

LDA seeks to maximize the distance between examples of different classes and minimize the within-class distance, and thus is a more appropriate approach. LDA is also a linear transformation. For instance, LDA might better discriminate subtle but crucial odor projections, whereas PCA can remove the high-variance random noise in a projection.
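The difference can be seen in a small two-dimensional sketch. The data are invented: two odor classes differ only slightly along the second feature, while the first feature carries large variation that has nothing to do with class. The PCA axis is computed from the closed-form orientation of a 2x2 covariance matrix, and the LDA axis from the two-class Fisher criterion; all names are illustrative.

```python
import math


def mean(points):
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def scatter(points):
    """Return (sxx, sxy, syy), summed about the mean of the points."""
    mx, my = mean(points)
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    syy = sum((y - my) ** 2 for _, y in points)
    return sxx, sxy, syy


def pca_axis(points):
    """Direction of maximum variance; class labels are ignored."""
    sxx, sxy, syy = scatter(points)
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return (math.cos(theta), math.sin(theta))


def lda_axis(class_a, class_b):
    """Fisher direction: inverse within-class scatter times mean difference."""
    a, b = scatter(class_a), scatter(class_b)
    sxx, sxy, syy = a[0] + b[0], a[1] + b[1], a[2] + b[2]
    ma, mb = mean(class_a), mean(class_b)
    dx, dy = ma[0] - mb[0], ma[1] - mb[1]
    det = sxx * syy - sxy * sxy
    wx = (syy * dx - sxy * dy) / det
    wy = (-sxy * dx + sxx * dy) / det
    length = math.hypot(wx, wy)
    return (wx / length, wy / length)


# Hypothetical feature vectors: large class-independent spread in x,
# small but consistent class difference in y.
class_a = [(-3, 0.9), (-1, 1.1), (1, 0.9), (3, 1.1)]
class_b = [(-3, -1.1), (-1, -0.9), (1, -1.1), (3, -0.9)]

pca = pca_axis(class_a + class_b)
lda = lda_axis(class_a, class_b)
print("PCA axis:", pca)   # points almost entirely along x
print("LDA axis:", lda)   # points almost entirely along y
```

Projecting onto the PCA axis would keep the noisy feature and discard the one that separates the classes, while the LDA axis does the opposite.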

The classification stage identifies odors. Classical classification techniques are KNN (k nearest neighbors), Bayesian classifiers, and ANN (artificial neural networks). KNN with, say, 5 nearest points will find the 5 closest matches in the precompiled database. The class most common among those matches is assigned as the tested material's odorant class.

Bayesian classifiers first assign a posterior probability to each class in the lower-dimensional space and then pick the class with the highest posterior probability. ANN is closer to biological odor recognition. After being trained on the odor database, it is exposed to the unknown odorant and reports the odorant class with the strongest response. The classifier estimates the class and places a confidence level on it.

In decision-making, risks and application-specific knowledge are considered in order to modify the classification. All decisions are reported, even a nonmatch.
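A minimal k-nearest-neighbors sketch is shown below. It uses the usual majority vote among the k closest database entries and plain Euclidean distance; the odor labels and feature values are invented for the example.

```python
import math
from collections import Counter


def knn_classify(database, query, k=5):
    """Label a query vector by majority vote among its k nearest entries.

    database: list of (feature_vector, label) pairs.
    """
    nearest = sorted(database, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical precompiled odor database (already preprocessed features).
database = [
    ((0.90, 0.10, 0.20), "coffee"),
    ((1.00, 0.20, 0.10), "coffee"),
    ((0.80, 0.15, 0.25), "coffee"),
    ((0.10, 0.90, 0.80), "ethanol"),
    ((0.20, 1.00, 0.70), "ethanol"),
    ((0.15, 0.85, 0.90), "ethanol"),
]

unknown = (0.85, 0.20, 0.20)
print(knn_classify(database, unknown, k=3))
```

An odd k avoids ties in a two-class problem; with more classes a tie-breaking rule would be needed.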
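One simple way to realize a Bayesian classifier with a confidence level is a Gaussian naive Bayes model, sketched below. This is an assumption made for illustration: the article does not say which probability model is used. The training data, labels, and the small variance floor are likewise invented.

```python
import math


def fit(training, var_floor=1e-4):
    """training: dict mapping label -> list of feature vectors.

    Returns label -> (prior, per-feature means, per-feature variances).
    var_floor is an assumed safeguard against zero variance.
    """
    total = sum(len(rows) for rows in training.values())
    model = {}
    for label, rows in training.items():
        n, dims = len(rows), len(rows[0])
        means = [sum(r[d] for r in rows) / n for d in range(dims)]
        variances = [
            sum((r[d] - means[d]) ** 2 for r in rows) / n + var_floor
            for d in range(dims)
        ]
        model[label] = (n / total, means, variances)
    return model


def posteriors(model, x):
    """Posterior probability of each class for feature vector x."""
    log_scores = {}
    for label, (prior, means, variances) in model.items():
        log_p = math.log(prior)
        for xi, m, v in zip(x, means, variances):
            log_p += -0.5 * math.log(2 * math.pi * v) - (xi - m) ** 2 / (2 * v)
        log_scores[label] = log_p
    top = max(log_scores.values())  # subtract the max for numerical stability
    scaled = {label: math.exp(s - top) for label, s in log_scores.items()}
    z = sum(scaled.values())
    return {label: s / z for label, s in scaled.items()}


# Hypothetical low-dimensional features after feature extraction.
training = {
    "coffee": [(0.90, 0.10), (1.00, 0.20), (0.80, 0.15)],
    "ethanol": [(0.10, 0.90), (0.20, 1.00), (0.15, 0.85)],
}
model = fit(training)
post = posteriors(model, (0.85, 0.20))
best = max(post, key=post.get)
print(best, post[best])  # chosen class and its posterior as a confidence
```

The posterior of the winning class can serve as the confidence level, and a threshold on it is one way to report a nonmatch.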

CONCLUSION

As this article indicates, we can expect great progress in this area. And with each step forward, science and technology will continue to point toward the Greatest Artist's most subtle designs and allow us to appreciate them better.

#allah#god#prophet#Muhammad#quran#ayah#islam#muslim#muslimah#help#hijab#revert#convert#religion#reminder#hadith#sunnah#dua#salah#pray#prayer#welcome to islam#how to convert to islam#new convert#new muslim#new revert#revert help#convert help#islam help#muslim help

1 note


Review: The Bhagavad Gita & Personal Choice

At once a down-to-earth narrative and a multifaceted spiritual drama, the Gita bears a concrete timelessness, with its magnetism lying in the fact that it is a work not of religious dogma, but of personal choice.

For years, the Bhagavad Gita has distinguished itself as a masterpiece of spiritual and philosophical scripture. Beautifully condensing Upanishadic knowledge, the Gita traverses a number of subjects – from duty as a means toward liberation (moksha), to the risks of losing oneself to worldly temptations, to the dichotomy between the lower self (jiva) and the ultimate, eternal Self (atman) – as well as detailing a vast spectrum of human desires, treating them not as one-dimensional abstractions, but as the complex combinatorial dilemmas they are in real life. Written in the style of bardic poems, the Gita bears a concrete timelessness, with its magnetism lying in the fact that it is a work not of religious dogma, but of personal choice.

Recounting the dialogue between Arjuna, the Pandava warrior-prince, and Lord Krishna, his godling charioteer, the text covers a wide swath of Vedantic concepts which are then left for Arjuna to either follow or reject. This element of personal autonomy, and how it can lead to self-acceptance, environmental mastery and finally the spiritual path to one's true destiny, is an immensely alluring concept for readers today. But Arjuna's positioning is the real crux of the Gita: his conflicts between personal desire and sacred duty are the undercurrent of the tale, and the text offers equally spiritual and practical insights in every nuance.

The Gita begins with two families torn into different factions and preparing for battle. The sage Vyasa, who possesses the gift of divine vision, offers to loan the blind King, Dhritarashtra, his ability so the King may watch the battle. However, Dhritarashtra declines, having no wish to witness the carnage – particularly since his sons, the Kauravas, are arrayed on the battlefield. Instead, the sage confers his powers to Sanjaya, one of Dhritarashtra's counselors, who faithfully recounts the sequence of events as they unfold. From the start, readers' introduction to the Gita is almost sensory, with the battlefield stirred into action by Bhishma, who blows his conch horn and unleashes an uproarious war-frenzy, "conches and kettledrums, cymbals, tabors and trumpets ... the tumult echoed through heaven and earth... weapons were ready to clash." These descriptions serve as marked contrasts to the dialogic exchange that follows between Arjuna and Krishna, which is serene and private in tone, the two characters wearing the fabric of intimate friendship effortlessly as they are lifted out of the narrative, suspended as if in an aether where the concept of time becomes meaningless.

Arjuna – whose questions carry readers through the text – stands with Krishna in the heart of the battlefield, between the two armies. However, when he sees the enemy arrayed before him, "fathers, grandfathers, teachers, uncles, brothers, sons, grandsons and friends," he falls into the grip of a moral paralysis. His whole body trembles and his sacred bow, Gandiva, slips from his hands. He tells Krishna, "I see omens of chaos ... I see no good in killing my kinsmen in battle... we have heard that a place in hell is reserved for men who undermine family duties." As Jacob Neusner and Bruce Chilton remark in the book, Altruism in World Religions, "To fight his own family, Arjuna realizes, will violate a central tenet of his code of conduct: family loyalty, a principle of dharma." The concept of dharma holds an integral place in Hindu-Vedantic ethos, with Sanatana Dharma (eternal and universal dharma) regarded as a sacred duty applicable to all, and Swadharma (personal and particular dharma) sometimes coming into conflict with the former. It is this inherent contradiction that catalyzes Arjuna's self-doubt. "The flaw of pity blights my very being; conflicting sacred duties confound my reason."

It is Krishna who must inspire him to fight, through comprehensive teachings in the essentials of birth and rebirth, duty and destiny, action and inertia. There is an allegorical genius here that will appeal tremendously to readers. The military aspects of the Gita can easily serve as metaphors for not just external real-life battles, but internal battles of the self, with the two armies representing the conflict between the good and evil forces within each of us. In that sense, Krishna's advice to Arjuna – the seven-hundred slokas – becomes a pertinent, pragmatic guide to human affairs. With each verse, both Arjuna and the readers are offered perspectives and practices which, if followed, can allow them to achieve a robust understanding of reality.

With the spiritual underpinnings of the wisdom known as Sankhya, Krishna explains different yogas, or disciplines. Readers slowly begin to encounter all the components of humanity and the universe, through the lens of Arjuna, whose moral and spiritual weltanschauung undergoes a gradual metamorphosis – from, "If you think understanding is more powerful than action, why, Krishna, do you urge me to this horrific act? You confuse my understanding with a maze of words..." to "Krishna, my delusion is destroyed... I stand here, my doubt dispelled, ready to act on your words."

We are introduced in slow but mesmerizing detail to the wisdom within Arjuna himself; an omniscience that eluded him because it was hidden beneath illusion, or maya. Indeed, Krishna makes it clear that the very essence of maya is to conceal the Self – the atman – from human understanding by introducing the fallacy of separation, luring individuals with the promise that enlightenment springs not from within but from worldly accouterments: in sensual attraction, in the enticements of wealth and power. However, Krishna makes it clear that the realm of the senses, the physical world, is impermanent, and always in flux. Whereas he, the supreme manifestation of the divine and the earthly, the past, present and future, is there in all things, unchanging. "All creatures are bewildered at birth by the delusion of opposing dualities that arise from desire and hatred."

Although these themes are repeated often throughout the Gita, in myriad ways, not once do they become tiresome. Although Krishna's role in much of the Mahabharata is that of a Machiavellian trickster, invested in his own mysterious agenda, he does eventually reveal himself to Arjuna as the omniscient deity. Yet never once does he coerce Arjuna into accepting his teachings, though they are woven inextricably and dazzlingly through the entirety of the Gita. Rather, he gives Arjuna the choice to sift through layers of self-delusion and find his true Self. This can be achieved neither through passive inertia, nor through power-hungry action, but through the resolute fulfillment of duty that is its own reward. In order to dissolve the Self, the atman, into Brahman and achieve moksha, it is necessary to fight all that is mere illusory temptation. Just as Krishna promises Arjuna, victory is within reach, precisely because as a Kshatriya-warrior, it is his sacred duty – his destiny – to fight the battle. More than that, the desire to act righteously is his fundamental nature; the rest is pretense and self-delusion. "You are bound by your own action, intrinsic to your being ... the lord resides in the heart of all creatures, making them reel magically, as if a machine moved them."

Although the issues that Arjuna grapples with often become metaphysical speculations, never once do they dehumanize his character. His very conflict between the vacillations of the self and sacred duty assures his position as something greater and more complex than a mere widget fulfilling Krishna's agenda. It is through the essence of Arjuna's conflict that he grows on a personal and spiritual level. Conflict so personal and timeless is inextricably tied to choice. In Arjuna's case, the decision to shed the constraints of temporal insecurities and ascend toward his higher Self – freed from the weight of futile self-doubt and petty distractions – rests entirely in his hands. Krishna aids him through his psychospiritual journey not with a lightning-bolt of instantaneous comprehension, but through a slow unraveling of illusions so that Arjuna will arrive at a loftier vantage, able to reconnect with his true Self, and to remember his sacred duty. The answers are already within him; the very purpose of Krishna's counsel is merely to draw them out. "Armed with his purified knowledge, subduing the self with resolve, relinquishing sensuous objects, avoiding attraction and hatred... unpossessive, tranquil, he is at one with the infinite spirit."

At its core, the Bhagavad Gita is timelessly insightful and life-affirmingly human, an epic that illustrates the discomfiting truths and moral dilemmas that continue to haunt modern-day readers. Despite its martial setting, it is not fueled by the atrocities of battle, but overflows with the wisdoms of devotion, duty and love. Its protagonist is inclined by lingering personal attachments, but compelled by godly counsel, to surpass both the narrow private restrictions of self-doubt and the broad social framework of family, in order to reconnect with his pure, transcendental Self. However, the Gita does not offer its teachings as rigid doctrine, but as a gentle framework through which readers can achieve a fresh perspective on the essential struggles of humankind. At once a down-to-earth narrative and a multifaceted spiritual drama, the Gita bears a concrete timelessness, with its magnetism lying in the fact that it is a work not of religious dogma, but of personal choice.

1 note


As the landscape of social acceleration and digital capitalism reinforce each other, the trend of "accelerationism" has emerged at this historical moment.

If social acceleration is a frequency perception and accelerating society is a cognitive framework for social acceleration, then accelerationism is a theoretical proposition with a distinct attitude.

Although the perception of speed has always accompanied the process of modernization, philosophy and modernity criticism have traditionally not been optimistic about its success, but concerned about it. The dichotomy constructed by modern social theory is that machine, technology and speed are on one side, while humanity, culture and soul are on the other. The former has squeezed and destroyed the latter, resulting in human alienation. This point is still evident in the criticism of social acceleration logic by German scholar Hartmut Rosa.

Accelerationism eliminates such antagonism and tension. It holds that capitalism enslaves technology and science, and that the potential productive forces should be liberated, and all the science and technology developed in capitalist society should be used to accelerate the process of technological development, thus triggering social struggles and realizing the blueprint of post-capitalism. The Accelerationist Manifesto, which emerged in 2013, stands out as an anti-neoliberal platform of the Western radical left.

It is generally believed that the accelerationist trend of thought has a historical connection with French left-wing thought of the 1970s. After the failure of the May 1968 movement, French thinkers argued that one should go even further than capitalism in the direction of the market's movement. In the 1990s Nick Land, a British philosopher, argued that politics was out of date, that confrontational tactics should be abandoned, and that the logic of capitalism should be accelerated until humans became a drag on intelligence on the planet.

That is to say, accelerationism essentially has two wings. The left's accelerationist vision is to bury capitalism through technology. The right's accelerationist vision is that technology and capital are naturally combined and that all constraints should be lifted to achieve infinite acceleration. The accelerationists of the left took capitalism as their opponent, arguing that unblocking technology would lead to its collapse and the birth of a new form of human society. Right-wing accelerationism shares the economic logic of capitalism by giving technology unlimited space, with the result that humans may be eliminated as "backward productive forces" but "superhumans" continue to operate on the profit track.

Like Lyotard's "libidinal economy", left-wing accelerationism, though it has a vision of a new society, accelerates into a risky game that resembles a "suicide attack". After the failure of May 1968, Lyotard believed that people could no longer find forms of fulfillment outside the libido, and that workers could only experience pleasure in using their bodies frantically. The accelerationists of the left argue that "we are betting on the untapped transformative potential of scientific research". Most people may not question this today, but how can we be sure that the transformative potential, once unleashed, will destroy only the capitalist order rather than the entire society, and that people will have a chance to rebuild from the ruins? Which will be destroyed first by infinite acceleration: the capitalist system, or ecology, climate, resources and humanity?

When we say that science and technology are the primary productive force, the implicit premise is that they are used in accordance with human purposes; otherwise science and technology may become the primary destructive force. Today, this concern is not unfounded. The core of the productive forces is people, the laborers themselves. Acceleration already puts great pressure on people; if further acceleration depends more and more on technology itself, then accelerationism may bring about a situation in which people can only adapt to the scale of technology and are left out of the social process, or at least withdrawn from control over it. In the future prospect of accelerationism, the "post-capitalist society" is not actually envisaged, and human freedom, liberation and all-round development are not the subject. As far as social ideals are concerned, left-wing accelerationism does not even offer a pie in the sky. The struggle strategies it envisages, such as establishing a knowledge base, mass control of the media, uniting the scattered proletariat, and asserting social and technological leadership, are in fact feeble. It is also in danger of being absorbed by right-wing accelerationism and reduced to a supporting role.

As for right-wing accelerationism, it advocates the "technology-capital alliance". Therefore, even though it believes that the existing capitalist system restricts the development of technology, it does not intend to deny the logic of capitalism, but believes that the acceleration of technology is bound to produce the strongest, that is, the best social system. What human beings pursue should not be "meaningless affairs" such as equality, democracy and pluralism, but the realization of technology-capital. Even if human beings are replaced, this is natural and reasonable. In this way, right-wing accelerationism completely abandons the principle that "the human being is the end", puts technology in the position of noumenon, and makes capital the guarantee of technology's noumenal status.

The two classical cognitive constructs of time and space have been dissolved into "acceleration" by modern technology, and the real experience of time and space has been displaced by digital experience. Faced with this rapid change, modern philosophy and theory are beginning to lose ground. Technology anxiety is receding from the mainstream. Technology criticism, cultural criticism, class analysis, the crusade against capital, and so on are all becoming less vocal. While technology is showing great unpredictability, blind expectations of good things are on the rise, and accelerationism can be seen as a typical example. It is worth noting that the future accelerationism envisions includes the subversion of the existing capitalist order, but people are not promised the status of subjects or social relations built on free development; they are promised only a chance to rebuild society, or even the prospect of being replaced by "superhumans".

Accelerationism takes Marx's "Fragment on Machines" as its theoretical source. The so-called "Fragment on Machines" appears in Marx's Grundrisse (Outlines of the Critique of Political Economy). Marx wrote: "The development of fixed capital shows the extent to which general social knowledge has become a direct productive force, and thus the extent to which the conditions of the social process of life are themselves controlled by and modified in accordance with the general intellect." As automatic machinery brings general social knowledge into production, living labor becomes a secondary link in the production process. Labor time had been the measure and source of wealth, and technology makes wealth no longer depend on people's labor time; but as long as capitalist relations of production remain in place, the arrival of machines may mean that workers are displaced, and not necessarily that free time increases.

On the whole, Marx has always been concerned about the liberation and free development of human beings. The relationship between technology, labor and capital has been constantly clarified and completed in Das Kapital. Accelerationism, however, is not so much a theory of how man achieves freedom and liberation under technological conditions as a theory of how man should ensure that technology achieves its goals. Frankly, on both the accelerationist left and the accelerationist right, what I see is an ardent argument for making technology happen, for making it accelerate even further, but it's hard to see a deep concern for human development.

1 note

Classic car insurance in Texas, USA with 40% off

People have difficulty controlling their excitement when riding in a vintage car with a sunroof on a December afternoon. Yelling and shouting, taking it on a coastal drive in the company of best friends, will surely leave them with some of the most joyful moments of their lives. But don’t let this lovely time turn into a nightmare when the traffic police step in. Check your policy papers for classic auto insurance in Texas first.

Is your policy expired?

Are you missing your liability cover?

Are you carrying the right policy for your classic automobile?

If you fail any of these checks, you could find yourself in legal trouble. Hey, are you scared? Relax, we can help you avoid such circumstances. Step on the brakes of your racing mind and read this post in detail.

How different is the scenario when shopping for classic auto insurance?

You are definitely a lucky car owner, as it’s not just a vintage old-school vehicle in your garage; it’s a diamond. Unlike ordinary cars, which usually depreciate, classic automobiles gain value with time and maintenance. Besides this contrast, some other key differences can affect your policy’s claims.

Be cool and pay a lower premium on driving your old antique machine

Unlike regular vehicles that run on the road daily, classic cars are their owners’ babies. Vintage car lovers take their vehicles out once in a blue moon. Thanks to good maintenance and extra care, these automobiles qualify for a collector’s vehicle insurance policy. Surprisingly, collector vehicles can save up to two-thirds of the premium cost compared with regular vehicles. Further, owners choose low-coverage plans for these vehicles as they rarely drive them.

Does manufacturing date make your car a classic?

If you think that a vehicle must be over 50 years old to be categorized as a classic, then you should get your facts right. In reality, most classic car insurers require an automobile to be at least 15 years old to qualify as collectible. However, there can be other reasons a car is collectible, such as being a special vehicle or a limited edition. A collector’s insurance policy is defined by these and other relevant factors for which conventional auto insurance policies make no provision. For example, regular car insurers may refuse to insure vehicles older than 25 years, whereas age is no obstacle under a collector car insurance policy.

The difference in agreement on the settlement value

When you purchase car insurance, a settlement basis is set for total loss claims. For traditional vehicles this is the actual cash value settlement, which the company decides after the damage. In contrast, a collector car insurance policy uses a separate agreed value provision. Under this agreement, you and the insurer decide on the collector car’s value by mutual consent.

Accounting for restoration

As you know, those who buy old cars often restore them sooner or later. Some may wish to upgrade performance and looks, while others may want personalization. Certainly, these improvements make the automobile different from its factory state.

Auto insurers generally estimate the claim amount based on factory-installed equipment.

When a vehicle has been modified, regular auto insurers limit both repair claims and the settlement value in total loss claims.

In comparison, the collectible vehicle policy for classic cars does not disappoint you with such constraints. The policy considers the various improvements in order to offer the right amount for repair and total loss claims. These improvements include upgraded engines, disc brakes, or suspension. Power steering, interior decor, air conditioning, and many other additions are also considered.

Choose the best repair spots with expertise in classic cars.

A regular insurance company may not help you when it comes to fixing faults in your vintage automobile. It may send you to just any repair shop, which can leave you dissatisfied with the service. But the specialists working with us know the genuine repair spots that can give your baby a new life. Our classic auto insurance company in Texas regularly reviews the best repair shops for classic cars and recommends the right list of places.

Pay lower deductible than regular auto insurance

Besides, buying a collectible auto insurance policy gives you the option of paying a lower deductible. In contrast to regular car insurance, the deductible is quite low: anywhere between $0 and $250, depending on your plan. So if you do have an accident, you spend substantially less.

Is my 1956 vintage Aston Martin eligible for car insurance?

As discussed earlier, it’s not the age that makes your vehicle a classic; in fact, it’s your love and care for a vehicle that you drive only rarely, for pleasure. Companies consider several factors when insuring a collectible car in Texas, such as:

Conditions for the automobile

The car is older than 15 years, or 25 years in the case of some insurers.

Your insurer may ask for proof that you have another car for regular use before it considers the policy vehicle collectible.

The car is stored in good conditions and kept clean in a garage. The company may ask for proof of this as well.

The vehicle has a smoothly running engine.

The car has low annual mileage: you should not drive it more than 7,500 miles a year.

The car is designed for on-road use.

Most important of all, the vehicle has no damage; a damaged vehicle is not accepted under the collectible car insurance policy.

Conditions for drivers

Drivers must have a clean driving record for at least five years.

Under the conditions of some companies, policyholders must be at least 25 years old.
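The conditions above can be read as a simple checklist. The sketch below is only an illustration of that reading, not any insurer's actual underwriting rule: the class names, field names, and `eligibility_issues` function are hypothetical, the thresholds are the figures quoted in this post, and the "age limit of 25 years" is assumed to mean a minimum driver age.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    age_years: int
    annual_miles: int
    garaged: bool           # stored in good conditions
    engine_runs: bool       # smoothly running engine
    damaged: bool
    road_legal: bool        # designed for on-road use


@dataclass
class Driver:
    age: int
    clean_record_years: int
    has_daily_driver: bool  # owns another car for regular use


def eligibility_issues(vehicle, driver, min_vehicle_age=15,
                       max_annual_miles=7500, min_driver_age=25,
                       min_clean_years=5):
    """Return the list of conditions that are NOT met (empty list = eligible)."""
    issues = []
    if vehicle.age_years < min_vehicle_age:
        issues.append("vehicle is too new")
    if vehicle.annual_miles > max_annual_miles:
        issues.append("annual mileage is too high")
    if not vehicle.garaged:
        issues.append("vehicle is not properly stored")
    if not vehicle.engine_runs:
        issues.append("engine does not run smoothly")
    if vehicle.damaged:
        issues.append("vehicle is damaged")
    if not vehicle.road_legal:
        issues.append("vehicle is not designed for on-road use")
    if not driver.has_daily_driver:
        issues.append("no alternative car for regular use")
    if driver.clean_record_years < min_clean_years:
        issues.append("driving record is not clean for long enough")
    if driver.age < min_driver_age:  # assumed to be a minimum age
        issues.append("driver is too young")
    return issues
```

Since some insurers use a 25-year vehicle threshold, `min_vehicle_age=25` can be passed instead of the default.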

List of vehicles ideal for classic auto insurance in Texas

A range of cars can qualify for a classic car insurance policy if they are well maintained and meet all the legal conditions. Generally, these vehicles are insured:

Old military vehicles.

Vintage cars manufactured between the 1920s and the 1950s.

Collectible cars from the period between the 1950s and the 1990s.

Vehicles of limited editions and special models.

Older farm vehicles collected for automobile shows and display purposes.

Know about the coverage and pricing of classic car insurance

What is under the coverage of a collectible car insurance policy?

Collectible car insurance works much like a typical one-year car insurance policy, except that it has special provisions and limits on usage and mileage. Whether you need comprehensive, collision, or liability coverage, you get it all.

For extra protection, you can choose medical insurance, uninsured driver coverage, and breakdown insurance.

Moreover, those who own high-cost classic automobiles should choose comprehensive coverage to be safe from damage and burglary. It also includes coverage for liability and collision.

On the other hand, you may opt for additional coverages for road trips, spare parts, or roadside assistance.

Individual companies have different rates; we advise you to compare rates using auto insurance quotes in Texas.

How costly is classic car insurance?

As for pricing, it would be no wonder if you saved an extra 30% on the insurance of your classic car.

The factors that generally affect the price of your classic car insurance include:

The value of your vehicle, including modifications and upgrades.

The driving record of the owner.

The area where you live.

All in all, this significant difference in the cost of classic car insurance arises because the vehicle is used less than a regular car.

Good and bad of buying classic auto insurance

Benefits of classic car insurance

Low-cost policy: Above all, you may get it at a lower price than a typical car insurance policy. On average, policyholders spend 30% less with our classic car insurance.

Coverage for spare parts: No worries about the damage or theft of spare parts such as gear and tires.

Get cash settlement: Has your classic car been stolen or totaled? Rest easy; your policy pays out a cash settlement in such events.

Loss in your absence: If your car is away from you at the time of loss, such as at an automobile show, you still qualify for coverage.

On-road support: Your vehicle may need on-road support to prevent further damage. A classic car insurance policy includes the cost of towing the vehicle in such cases.

Medical reimbursement at an auto show: If someone sustains an injury in your car at an auto exhibition, this coverage protects you from liability.

Disadvantages of classic car insurance

Rare discount opportunities: One drawback of classic car insurance is that, unlike with regular car insurance, you rarely get the chance to grab a discount on a classic auto insurance policy. Since your insurer is often a smaller niche company, you mostly miss out on discounts for things such as bundling policies.

Limit on mileage: Another drawback is the mileage limit; your insurer may require you not to drive beyond a certain distance to remain eligible.

Fewer coverage choices: Some classic car insurers include coverage options like roadside support and extended medical coverage. However, these are sometimes not available from smaller companies.

Policy change: Your classic car insurance can change while you own the car, in line with the rise and fall in its value, whereas typical car insurance plans see no such change. For example, if you are restoring your automobile, you have to adjust the policy again once the restoration is finished.

Still have questions about our classic auto insurance in Texas?

Should I buy insurance for my classic off-road car?

If you own an expensive classic car, we definitely recommend that you get an insurance policy, as it’s a valuable possession. As an owner, you will breathe a sigh of relief while taking care of your favorite automobile over the years.

What can I do to cut the cost of classic auto insurance in Texas?

Compare rates from as many brokers as you can, and choose the one with reasonable cost but good value.

Whether the value of the vehicle is low or high, buy a policy based on an agreed valuation.

Also, try to join a classic car club or society. Members often receive timely offers of vehicle insurance at a lower cost.

Jiyo Insurance - Get cheap and quick quotes for classic auto insurance in Texas

To sum up, Jiyo Insurance is the premier service provider for general auto and classic car insurance in Texas and, through our digital presence, throughout the US.

We are based primarily in Texas, and you can easily reach us at different locations anywhere in the state. Whether you need a quote in Dallas, Fort Worth, or El Paso, a policy in Austin or Arlington, service in San Antonio or Corpus Christi, or an affordable classic auto insurance plan in Houston, follow us.

You may own muscle cars, exotic cars, Cobra replicas, antique cars, military vehicles, or street rods; we accept all these vehicles for a classic auto insurance policy.

In a word, hurry up and grab the opportunity today to save up to 40% on your classic car insurance.

#classic cars#classic car insurance in usa#classic car insurance in texas#auto insurance quotes#auto insurance in Texas#auto insurance in San Antonio#Auto insurance in Houston#Auto insurance in Dallas#Auto insurance in Austin


Text

Critical Review

My work explores the concept of transformation. In the beginning it was my intention to capture the ephemeral, an idea which I had whilst on a walk. Walking had become a kind of therapy for me during the lockdown as I had been confined at home - I have been saying in jest for the past year I’m on house arrest and my walks are my yard time, but I concede that’s an exaggeration, even if it felt like it sometimes. The act of walking was my slice of the day where I could be on autopilot; it allowed me the time to just walk and think. Being in nature, I was observing the plants and flowers and began collecting them. I wanted to preserve my collection, to shift them from ephemeral to permanent objects. I primarily used air drying clay to achieve this, which I pressed my flowers into to create moulds from which I could take a positive cast. I had spent a few months perfecting this technique and working to this process and had eventually stockpiled a collection of botanical tiles in different colours and sizes, but my concept had stagnated somewhat by this point; similarly, my daily walking route, which I had enjoyed, had begun to feel like an obligation. The repetition became tedious and is analogous to how I was feeling during lockdown; autopilot had lost its novelty. I had realised that my daily practice was like a production line, where I was manufacturing my art in batches from a mould and repeating the process. I began thinking about the modern world and particularly how technology and mass manufacturing had played a vital role in the worldwide response to the pandemic. I was interested in where the line is drawn between functional design and a work of art and sought to explore this in my project, which saw its own transformation going forward. The everyday object reimagined as fine art has been a subject of intrigue among artists and art lovers since the early Twentieth Century. Since the readymades of Marcel Duchamp, the bounds of art have been redefined. 
The subject expanded in the 1960s with the emergence of the Pop Art movement, with artists such as Claes Oldenburg and Andy Warhol who created whimsical replicas of common household items, transforming the functional object into ornamental sculpture. In particular, Warhol’s work was a response to consumer culture, which transformed certain household brands into art world icons synonymous with his name. By looking at Dadaism, Surrealism and Pop Art, we can see some of the varying ways in which objects have been used in the past. The object form has been used as a means of expression of self, as a form that can be metamorphosed into things created from imagination, as a technical means of expression, as a social statement on society, and as a means of creating art which questions art itself. (Hanna, 1988) Today, the everyday object as art remains a pervasive subject in contemporary art. Tokyo-based artist Makiko Azakami is one such artist who transforms everyday objects by using only paper for her lifelike sculptures; ‘through careful cutting and meticulous handcrafting, Azakami breathes new life into humdrum objects and creates pieces that are deceptively fragile and extraordinarily detail-oriented’ (Richman-Abdou, 2016). Korean artist Do Ho Suh creates lifesize object-replicas of fittings and appliances around his apartment using wire and polyester fabric netting. The use of these materials transforms the objects from functional to ornamental whilst retaining their defunct detail, reframing the domestic object, and wider domestic space, as sculpture. ‘The transparency of the fabrics…is important conceptually because I’m trying to communicate something of the permeability in the ways in which we construct ourselves’ (Suh, 2020). 
Other contemporary artists use found objects in their work, which serve a specific purpose that the artist abandons, choosing to elevate the mundane to the realm of fine art, and dissolve the boundaries between “high” and “low” forms of culture. (Artnet News, 2017). In my own practice, I chose to study the everyday object of the lightswitch, the idea of which was suggested to me during a presentation of my work. I had built my installation around a lightswitch on my studio wall, an unconscious choice on my part, perhaps going to show just how on autopilot I was. I was interested in replicating the lightswitches around my home using subversive materials and experimenting with installations. I began by taking clay impressions of the lightswitches around my home from which I could make positive casts. This made me think about automation; I felt that the repetition of taking casts from a mould was similar to a production line, and I was the machine, similar to Warhol’s production line process of silkscreen printing, as Bergin writes: ‘The Machine is, to the artist, a way of life, representative of a unique field of twentieth-century experience, and all of Warhol’s art is striving to express the machine in the machine’s own terms’ (Bergin, 1967). Perhaps all art has an agenda; is any art made just for the joy of it? Or is it just to fulfil some demand? I began to wonder if all art, except for the earliest cave paintings, was produced purely to be consumed. If Warhol’s brillo boxes represent the collapse of the boundary between artistic creation and mass production (Baum, 2008), then where exactly is the boundary? I came to the conclusion that any commodifiable artwork is a product, and creating art is just another form of production for consumption. 
When I had my finished clay tile with a porcelain effect painted finish, I installed it on my kitchen wall. At first glance it appears to be a standard lightswitch; however, when it is examined up close the viewer’s expectation is subverted, as you can see that it is a handmade replica. The functional design of the lightswitch is reimagined through the materiality of natural clay, transforming the object from a functional design into an ornamental replica. I had made many clay lightswitches already, and wanted to explore other subversive materials to utilise. Inspired by Rachel Whiteread’s resin replicas of doors, I began making coloured resin casts from my existing silicone mould, adding a different coloured resin pigment each time. Displayed as stacked on backlit shelves, the work invites the viewer to peruse as though they were in a supermarket, highlighting that art is another form of production for consumption in the modern world. I then began thinking about scale, but this time I wanted to use the intangible material of light itself as my medium. Using my transparent coloured resin tiles and a light projector, I projected onto my studio walls. This opened up a door to working digitally, working with media such as video and Photoshop. Working in this way allowed me to explore scale as freely as I liked without time or space constraints. I began thinking that digital media is an imitation of the real thing. My projections, for example, are not really lightswitches, they are replicas of lightswitches made from the material of refracted light waves. My video gifs and my Photoshopped site-specific work are just information converted to binary numbers and translated into pixels on a screen. I think in this way, digital and electronic media are the ultimate subversive material. Overall, my project experienced a dramatic transformation. In the beginning, lockdown had only just begun and it was a new experience. 
Fourteen drudgerous months later, I am not the same person I was then. The whole world has had its own dystopian transformation, where we now so heavily rely on technology to survive. We have surrendered authentic experience for a pale imitation of the real thing. Our New Normal is just a replica of the life we left behind, subversive in the way that at a superficial glance all remains the same, but on closer inspection is just a substitution. As I made my tiles I was a machine, so too have we become machine men; just pixels on a screen or voices on the end of a line, a replica of the blood and cells and sinew and breath and acne that made us really human.

Artnet News In Partnership With Cartier, (2017) ‘11 Everyday Objects Transformed Into Extraordinary Works of Art’, artnet.com. Article published May 9, 2017. Available at: https://news.artnet.com/art-world/making-art-from-mundane-materials-900188.

Baum, R (2008) ‘The Mirror of Consumption’, essay published in Andy Warhol by Andy Warhol. Available at: https://www.fitnyc.edu/files/pdfs/Baum_Warhol_Text.pdf. p.29.

Bergin, P (1967) ‘Andy Warhol: The Artist as Machine’, Art Journal XXVI, no.4. Available at: https://www.yumpu.com/en/document/read/8274194/andy-warhol-the-artist-as-machinepdf-american-dan. p.359.

Hanna, A (1988) OBJECTS AS SUBJECT: WORKS BY CLAES OLDENBURG, JASPER JOHNS, AND JIM DINE, Colorado State University Fort Collins, Colorado, Spring 1988. Available at: https://mountainscholar.org/bitstream/handle/10217/179413/STUF_1001_Hanna_Ayn_Objects.pdf?sequence=1

Richman-Abdou, K (2016) ‘Realistic Paper Sculptures of Everyday Objects Transform the Mundane Into Works of Art’, Mymodernmet.com. Article published October 20, 2016. Available at: https://mymodernmet.com/makiko-akizami-paper-sculptures/?context=featured&scid=social67574196&adbid=794911171832901632&adbpl=tw&adbpr=63786611#.WB3vIQMclAU.pinterest.

Suh, D H (2020) HOW ARTIST DO HO SUH FULLY REIMAGINES THE IDEA OF HOME, crfashionbook.com. Article published MAY 22, 2020. Available at: https://www.crfashionbook.com/mens/a32626813/do-ho-suh-fully-artist-interview-home-korea/


Text

Application Migration to Cloud: Things you should know

Businesses can get more out of their applications by migrating them to the cloud, improving cost-effectiveness and the ability to scale up. But like any other migration or relocation process, application migration involves taking care of numerous aspects.

Some companies hire dedicated teams to perform the migration process, and some hire experienced consultants to guide their internal teams.

Owing to the pandemic, the cloud is the clear choice of destination for application migration. Even though there are still a few underlying concerns about the platform, the benefits outweigh the disadvantages. According to Forbes, 32% of IT budgets were expected to be dedicated to the cloud by 2021.

Insights like these make application migration to the cloud imperative.

Overview: Application Migration

Application migration involves a series of processes to move software applications from the existing computing environment to a new environment. For instance, you may want to migrate a software application from its data center to new storage, from an on-premise server to a cloud environment, and so on.

As software applications are built for specific operating systems and particular network architectures, or for a single cloud environment, moving them can be challenging. Hence, it is crucial to have an application migration strategy to get it right.

Usually, it is easier to migrate software applications from service-based or virtualized architectures instead of those that run on physical hardware.

Determining the correct application migration approach involves considering individual applications and their dependencies, technical requirements, compliance, cost constraints, and enterprise security.

Different applications call for different migration approaches, even within the same technology environment. Since the advent of cloud computing, experts have referred to patterns of application migration by names such as:

· Rehost: Also called lift-and-shift, this is the most common pattern, in which enterprises move an application from an on-premise server to a virtual machine in the cloud without any significant changes. Rehosting is usually quicker than other migration strategies and reduces migration costs significantly. The downside is that, without changes, applications do not benefit from native cloud computing capabilities, so the long-term expense of running them in the cloud could be higher.

· Refactor: Also called re-architect, this refers to introducing significant changes to the application so that it scales or performs better in the cloud environment. It involves recoding some parts of the application to take advantage of cloud-native functionality, for example restructuring a monolithic application into microservices, or modernizing stored data from basic SQL to advanced NoSQL.

· Replatform: Replatforming involves making some tweaks to the application so that it benefits from the cloud architecture, for instance upgrading an application to work with a cloud-native managed database, or containerizing it.

· Replace: Decommissioning an application sometimes makes sense: when it has limited value, when its capabilities are duplicated elsewhere in the environment, or when replacing it with something new, such as a SaaS platform, is more cost-effective.
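As a rough illustration of how the four patterns relate, the sketch below maps a few yes/no questions about an application to one of them. The decision order and the parameter names are assumptions made for this example only; a real assessment would weigh many more factors.

```python
from enum import Enum


class Pattern(Enum):
    REHOST = "rehost"          # lift-and-shift, no significant changes
    REPLATFORM = "replatform"  # minor tweaks to benefit from the cloud
    REFACTOR = "refactor"      # significant re-architecture
    REPLACE = "replace"        # decommission and substitute, e.g. with SaaS


def suggest_pattern(*, low_value, duplicated_elsewhere,
                    needs_cloud_native, minor_tweaks_enough):
    """Suggest a migration pattern from four yes/no answers (illustrative rule order)."""
    if low_value or duplicated_elsewhere:
        return Pattern.REPLACE
    if needs_cloud_native:
        return Pattern.REFACTOR
    if minor_tweaks_enough:
        return Pattern.REPLATFORM
    return Pattern.REHOST
```

An application that needs no changes and is still valuable falls through to `Pattern.REHOST`, which matches the description of lift-and-shift as the default, quickest route.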

The cloud migration service market value was USD 119.13 billion. It is predicted to reach USD 448.34 billion in the next six years by 2026. A CAGR value of 28.89% is forecasted from 2021 to 2026.
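For readers who want to see how a start value, an end value, and a CAGR relate to each other, here is a minimal sketch of the standard compound-growth formula. The function names are hypothetical, and the result for any market figures depends on which base year and how many periods are counted.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value in `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value reached after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years
```

Calling `cagr(start, end, periods)` with the quoted market values shows which growth rate they imply for a given number of periods.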

Key Elements of Application Migration Strategy

To develop a robust application migration strategy, it is imperative to understand the application portfolio, specifics of security, compliance requirements, cloud resources, on-premise storage, compute, and network infrastructure.

For a successful cloud migration, you must also clarify the key business drivers motivating it and align the strategy with those drivers. It is also essential to be clear about why you need to migrate to the cloud and to have realistic transition goals.

Application Migration Plan

There are three stages of an application migration plan. It is critical to weigh potential options in each stage, such as factoring in on-premise workloads and potential costs.

Stage#1: Identify & Assess

The initial discovery phase begins with a comprehensive analysis of the applications in the portfolio. Identify and assess each one as part of the application migration approach. You can then categorize applications based on whether they are critical to the business, whether they have strategic value, and what you ultimately want to achieve from the migration. Strive to recognize the value of each application in terms of the following characteristics:

· How it impacts your business

· How it can fulfill critical customer needs

· What is the importance of data and timeliness

· Size, manageability, and complexity

· Development and maintenance costs

· Increased value due to cloud migration

You may also consider assessing your application’s cloud affinity before taking up the migration. During the process, determine which applications are ready to move as they are and which ones might need significant changes to be cloud-ready.

You may also employ discovery tools to determine application dependency and check the feasibility of workload migration beyond the existing environment.

Stage#2: TCO (Total Cost of Ownership) Assessment

Determining the budget for a cloud migration is challenging and complicated.

You need to compare “what-if” scenarios: the cost of keeping the infrastructure and applications on-premise against the costs associated with cloud migration. In other words, you have to calculate the cost of purchase, operations, and maintenance for the hardware you want to keep on-premise in both scenarios, as well as licensing fees.

In the cloud scenario, the provider will charge recurring bills, and you will also incur migration costs, testing costs, employee training costs, etc. The cost of maintaining any legacy applications that stay on-premise should be considered as well.
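The comparison described in this stage can be sketched as a small calculation. The cost categories below are the ones named in the text; the class names, field names, and the simple "one-off cost plus yearly cost times years" model are assumptions for illustration, not a complete TCO method.

```python
from dataclasses import dataclass


@dataclass
class OnPremCosts:
    hardware_purchase: float   # one-off
    annual_operations: float
    annual_maintenance: float
    annual_licensing: float


@dataclass
class CloudCosts:
    migration: float           # one-off
    testing: float             # one-off
    training: float            # one-off
    monthly_bill: float        # recurring provider charges
    annual_legacy_on_prem: float = 0.0  # legacy apps that stay on-premise


def on_prem_tco(c: OnPremCosts, years: int) -> float:
    yearly = c.annual_operations + c.annual_maintenance + c.annual_licensing
    return c.hardware_purchase + years * yearly


def cloud_tco(c: CloudCosts, years: int) -> float:
    one_off = c.migration + c.testing + c.training
    yearly = 12 * c.monthly_bill + c.annual_legacy_on_prem
    return one_off + years * yearly
```

Running both functions over the same number of years gives two totals that can be compared directly; changing `years` shows how the one-off migration costs are spread over the planning horizon.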

Stage#3: Risk Assessment & Project Duration

When the final stage arrives, you have to establish a feasible project timeline, identify potential risks and hurdles, and make room for them in the plan.

Stage#4: Legacy Application Migration to The Cloud

Older applications are more challenging to migrate. They can be problematic and expensive to maintain in the long run. They may even present potential security concerns if they have not been patched recently, and they may perform poorly in the latest computing environment.

Migration Checklist

The application migration approach should assess the viability of each application and prioritize the candidate for migration. Consider the three C’s:

· Complexities

- Was the application developed in-house? If yes, is the developer still an employee of the company?

- Is the documentation of the application available readily?

- When was the application created? How long was it in use?

· Criticality

- How many more workflows or applications in the organization depend on this?

- Do users depend on the application on a daily or weekly basis? If so, how many?

- What is the acceptable downtime before operations are disrupted?

- Is this application used for production, development, or testing, or for all three?

- Is there any other application that requires uptime/downtime synchronization with the application?

· Compliance

- What are the regulatory requirements to comply with?
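One way to use the three C's is to record the answers for each application and sort the portfolio so that the simplest candidates move first. The sketch below does that; the field names, the one-point-per-concern scoring, and the thresholds (100 daily users, one hour of downtime) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AppAssessment:
    name: str
    built_in_house: bool
    developer_available: bool
    documented: bool
    dependent_apps: int          # workflows/applications relying on this one
    daily_users: int
    max_downtime_hours: float    # acceptable downtime
    regulations: list = field(default_factory=list)

    def complexity(self) -> int:
        score = 0
        if self.built_in_house and not self.developer_available:
            score += 1
        if not self.documented:
            score += 1
        return score

    def criticality(self) -> int:
        score = 0
        if self.dependent_apps > 0:
            score += 1
        if self.daily_users > 100:        # assumed threshold
            score += 1
        if self.max_downtime_hours < 1:   # assumed threshold
            score += 1
        return score

    def compliance(self) -> int:
        return len(self.regulations)


def migration_order(apps):
    """Lowest combined score first; ties are broken by name."""
    return sorted(
        apps,
        key=lambda a: (a.complexity() + a.criticality() + a.compliance(), a.name),
    )
```

Starting with the lowest-scoring applications lets a team gain experience before tackling the complex, critical, or heavily regulated ones.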

Application Migration Testing

An essential part of the application migration plan is testing. Testing is vital to make sure no data or capability is lost during the migration process. You should perform tests during the migration to verify that the data is present, that its integrity is maintained, and that it is stored in the correct location.

It is also necessary to conduct further tests after the migration process is over, to benchmark application performance and ensure security controls are in place.
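A common way to check that no data was lost or altered is to compare a digest of every record before and after the move. The sketch below assumes records are plain dictionaries with a unique `id` field and flat, printable values; both the helper names and that data shape are assumptions for this example.

```python
import hashlib


def record_digest(record: dict) -> str:
    """SHA-256 of a record, independent of key order."""
    canonical = repr(sorted(record.items())).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def compare_datasets(source, target, key="id"):
    """Report records missing from, changed in, or unexpectedly added to the target."""
    src = {r[key]: record_digest(r) for r in source}
    tgt = {r[key]: record_digest(r) for r in target}
    return {
        "missing": sorted(k for k in src if k not in tgt),
        "changed": sorted(k for k in src if k in tgt and src[k] != tgt[k]),
        "extra": sorted(k for k in tgt if k not in src),
    }
```

An empty result in all three lists suggests the migrated data matches the source; any entry points to a record worth inspecting before cut-over.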

Steps of Application Cloud Migration Process

#1: Outline Reasons

Outline your business objectives and take an analysis-based application migration approach before migrating your applications to the cloud.

Do you want reduced costs? Are you trying to gain innovative features? Planning to leverage data in real-time with analytics? Or improved scalability?

Your goals will help you make informed decisions. Build your business case for moving to the cloud. When the migration is aligned with the key objectives of the business, successful outcomes follow.

#2: Involve The Right People

You need skilled people to be a part of your application migration strategy. Build a team with the right set of people, including business analysts, architects, project managers, infrastructure/application specialists, security specialists, subject matter experts, and vendor management.

#3: Assess Cloud-Readiness of the Organization

Conduct a detailed technical and business analysis of the current environment, infrastructure, and apps. If your organization lacks the skills, you can consult an IT company to provide an assessment report on cloud readiness. It will give you a deep insight into the technology used and much more.

Many legacy applications are not optimized for cloud environments. They are usually chatty: they call other services for information and to answer queries.

#4: Choose An Experienced Cloud Vendor to Design the Environment

Choosing the right vendor is a critical decision. Microsoft Azure, Google Cloud, and AWS are some of the most popular platforms for cloud hosting.

The right platform depends on specific business requirements, application architecture, integration, and various other factors.

Your migration team has to decide whether a public/private/hybrid/multi-cloud environment would be the right choice.

#5: Build the Cloud Roadmap

As you gain in-depth insight into the purpose of the cloud migration, you can outline the key components of the move. Which applications move first depends on business priority and migration difficulty. Investigate other opportunities, such as an incremental application migration approach.

Keep refining the initially documented reasons for moving an application to the cloud, highlight the key areas, and proceed further.

A comprehensive migration roadmap is an invaluable resource. Map and schedule the different phases of cloud deployment to make sure they stay on the right track.

Conclusion

The application migration approach can open new avenues for change and innovation, such as application modernization on the journey to the cloud. Several services are already available to help enterprises strategize, plan, and execute a successful application cloud migration. But you should always seek application migration consulting before getting started.


Text

QualityManagement

According to the Center for Business and Economic Research, each $1 spent on quality management generates a $3 increase in profit and $16 in cost reduction. Those who work in quality often point out that a Quality Management System (QMS) isn't a machine or an application, but the inherent quality process architecture on which the company rests. The term "QMS" includes all of the people, stakeholders, processes, and technologies involved in a company's culture of quality, in addition to the key business objectives that make up its goals. Quality is both an outlook and a way of increasing customer satisfaction, decreasing cycle time and costs, and eliminating errors and rework using a set of tools such as Root Cause Analysis and Pareto Analysis. These include procedures, tools and techniques that are used to make certain that outputs and benefits meet customer requirements.

The first element, quality planning, involves preparing a quality management plan that describes the processes and metrics that will be used. The quality management plan has to be agreed with stakeholders to ensure that their expectations for quality are correctly identified. The processes should conform to the processes, culture and values of the host organisation.

Quality assurance validates the use of processes and standards, and ensures staff have the correct understanding, skills and attitudes to fulfil their project roles and duties in a competent manner. Quality assurance has to be independent of the project, programme or portfolio to which it applies.

The next part, quality control, consists of inspection, measurement and testing. It verifies that the deliverables conform to specification, are fit for purpose and meet stakeholder expectations.

Quality control activities determine whether acceptance criteria have, or have not, been met. For this to be effective, specifications must be under strict configuration control. It is possible that, once agreed, the specification might need to be modified. Commonly this will be to accommodate change requests or issues, while maintaining acceptable time and cost constraints. A P3 maturity model provides a framework against which continuous improvement can be initiated and embedded in the organisation.

Projects that are part of a programme may well have much of the quality management plan developed at programme level to make sure that standards are consistent with the rest of the programme. This might appear to be an administrative burden at the start of smaller projects, but it is worthwhile in the end. Projects deliver outputs that are subject to many forms of quality control, depending upon the technical nature of the work and the codes affecting particular sectors. Examples of inspecting deliverables include crushing samples of concrete used in the foundations of a building; x-raying welds in a boat's hull; and following the test script for a new piece of software.

Inspection produces data, and tools such as scatter diagrams, control charts, flowcharts and cause and effect diagrams all help to understand the quality of work and how it might be improved. The main contribution to continuous improvement that can be made within the timescale of a project is through lessons learned. Existing lessons learned should be consulted at the beginning of every project, and any relevant lessons used in the preparation of the project documentation.

At the end of each project, the lessons learned should be documented as part of the post-project review and fed back into the knowledge database.

The responsibility of the programme management team is to create a quality management plan that encompasses the diverse contexts and technical requirements contained within the programme. This sets the standards for the project quality management plans and also functions as a plan for quality in the benefits realisation parts of the programme. A comprehensive quality management plan at programme level can greatly reduce the effort involved in preparing project-level quality management plans. Quality control of outputs is mainly handled at project level; however, the programme may get involved where an output from one project is an input to another, or where extra inspection is needed when outputs from two or more projects are brought together. The programme is responsible for quality control of benefits. This is a complex task, since the acceptance criteria of a benefit may cover subjective as well as measurable factors, but benefits should be defined in measurable terms so that quality control can be applied.

The typical scale of programmes means that they have a very useful role to play in continuous improvement. Programme assurance will ensure that projects do take lessons learned into account and then capture their own lessons for the knowledge database.

The very nature of a portfolio means that it is unlikely to need a portfolio-level quality management plan.

0 notes

Text

Approaches to Internal Revenue Service Tax Debt Relief

"This English word comes from Latin Taxo, ""I estimate"". Taxing consists in imposing a monetary cost upon a person. Not paying is generally culpable by law. They can be identified as indirect taxes (a cost imposed straight on a person and gathered by a greater authority) or indirect taxes (troubled items or solutions as well as eventually paid by consumers, a lot of the moment without them recognizing so). Purposes of taxation The initial unbiased tax ought to accomplish is to drive human growth by offering health and wellness, education and also social security. This purpose is additionally very crucial for a steady, effective economy. A 2nd goal and also a consequence of the very first is to reduce poverty as well as inequality. Typically, individuals making more are proportionally exhausted much more as well.

The development of the individual income tax in the United States has a long - and some would say unsteady - history. The Founding Fathers included explicit language in the Constitution regarding the authority of the Federal Government to tax its citizens. Specifically, Article 1, Section 2, Clause 3 states

Government tax is used to meet both revenue and capital expenditure. Revenue expenditure goes towards running the government and towards collecting the tax. Capital expenditure goes towards building infrastructure, capital assets and other forms of investment that generate long-term returns and benefits for citizens. It should always be the endeavor of the government to meet its revenue expenditure out of current taxes and to build assets at the same time with a long-term view.

Last of all, a business requires a Federal Tax Identification Number or Employer Identification Number (EIN) so that it can maintain its own identity or entity in the marketplace. Note that the tax ID number cannot be transferred when a business is transferred. If the structure or ownership changes, a new tax ID number is required for the business. Above all, you have to gather the relevant information to obtain an EIN.

The courts have generally held that direct taxes are limited to taxes on people (variously called capitation, poll tax or head tax) and property. (Penn Mutual Indemnity Co. v. C.I.R., 227 F. 2d 16, 19-20 (3d Cir. 1960).) All other taxes are commonly referred to as "indirect taxes," because they tax an event, rather than a person or property per se. (Steward Machine Co. v. Davis, 301 U.S. 548, 581-582 (1937).) What seemed to be a straightforward limitation on the power of the legislature based on the subject of the tax proved inexact and unclear when applied to an income tax, which can arguably be viewed either as a direct or an indirect tax.


Definitions of Tax Mitigation, Avoidance and Evasion. It is impossible to express a precise test as to whether taxpayers have avoided, evaded or merely mitigated their tax obligations. As Baragwanath J said in Miller v CIR; McDougall v CIR: What is legitimate 'mitigation' (meaning avoidance) and what is illegitimate 'avoidance' (meaning evasion) is, in the end, to be decided by the Commissioner, the Taxation Review Authority and ultimately the courts, as a matter of judgment. Please note that in the above statement the words are exactly as stated in the judgment. However, the terminology is mixed up, which I have clarified with the words in brackets. Tax Mitigation (Avoidance by Planning): Taxpayers are entitled to mitigate their liability to tax and will not be vulnerable to the general anti-avoidance rules in a statute. A description of tax mitigation was given by Lord Templeman in CIR v Challenge Corporation Ltd: Income tax is mitigated by a taxpayer who reduces his income or incurs expenditure in circumstances which reduce his assessable income or entitle him to a reduction in his tax liability.

Over the years many have exploited countless examples of such tax arbitrage, using elements of the legislation at the time. Examples are finance leasing, non-recourse lending, tax-haven (a country or designated zone that has low or no taxes, or highly secretive banks and often a warm climate and sandy beaches, which make it attractive to foreigners bent on tax avoidance and evasion) 'investments' and redeemable preference shares. Low-tax policies pursued by some countries in the hope of attracting international businesses and capital are called tax competition, which can provide a rich ground for arbitrage. Economists usually favor competition in any form. But some say that tax competition is often a beggar-thy-neighbor policy, which can reduce another country's tax base, or force it to change its mix of taxes, or stop it taxing in the way it would like.

Tax collection is carried out by an agency that is specifically designated to perform this function. In the United States, it is the Internal Revenue Service (IRS) that performs this function. There are penalties for failing to comply with the rules and regulations set by governing authorities regarding taxation. Penalties may be imposed if a taxpayer fails to pay his taxes in full. Penalties may be civil in nature, such as a fine or forfeiture, or criminal in nature, such as incarceration. These penalties may be imposed on an individual or on an entity that fails to pay its taxes in full.

Banks were the first to levy service tax on their customers. From the time of their inception, they regularly expressed service costs in the form of processing fees. The responsibility for collecting the levy is entrusted to the Central Board of Excise and Customs (CBEC), which is an authority under the Ministry of Finance. This authority formulates the tax system in India."

0 notes

Text

How To Save Money with Fortnite Free V-Bucks?

What Is Fortnite Then The Digital Currency V

Fortnite is a co-op sandbox survival video game developed by People Can Fly and Epic Games. This is a freshly discovered space in a person from the Epic Games forums - it seems a number of Fortnite "movie" cases were actually damaged or corrupted. According to Marksman, selling Fortnite codes is a safer choice than selling broken-into accounts, although the accounts might be more lucrative (one seller I spoke with was selling an account with few skins for $900). Players can recover stolen accounts by contacting Epic Games' support and resetting their details. The regulations are immaterial.

The Fortnite World Cup Online tournaments start on April 13 and will run every week for twenty weeks across every region. The semi-finals session takes place on the Saturday and is three hours long, with a 10 match limit. If you place in the top 3000 players, you'll be able to take part in the finals on Sunday. The Fortnite Week 1 Challenges of the Season 8 Battle Pass are live now, such as visiting all Pirate Camps and the giant face locations. Clear at least some of the challenges to earn 5,000 XP. This set was released on Feb 28, 2019.

These virtual coins can be purchased from the official Fortnite store as well as from retailers including Microsoft and GAME. Still, with 1,000 coins costing roughly $10, there is a market for discounted coins, which are eagerly snapped up by players. Now this is a very indirect question I have asked. Just because Fortnite is only one kind of game, most games could not really adopt Fortnite's model, could they? Or could they? I think they can. That clearly applies to online games, particularly games like Call of Duty and FIFA.

Fortnite is control without V-Bucks, vbucks.codes presented me with countless total of v-bucks, to enjoy all of Roblox. While there was a copyright fight against the game, it now appears Fortnite is safe, for now. Bloomberg reports that PUBG Corp. sent "a letter of withdrawal" to Epic Games' lawyers on Saturday, and that the case is now closed. While it remains unclear just how much money criminals have managed to make through Fortnite, over $250,000 worth of Fortnite items were sold on eBay in a two-month period last year. Research from Sixgill also shows an increase in the number of mentions of the game on the dark web, with a direct correlation to the game's revenue.

This Swagbucks link will let you get a free $3 worth of points when you earn just $3 worth. It will allow you to get at least the nice stuff in Fortnite. Squad up with your friends and get an Xbox One X 1TB console, Xbox wireless controller, Fortnite Battle Royale, the Legendary Eon cosmetic set, and 2,000 V-Bucks. How do you get free V-Bucks in Fortnite? This is the question being asked by many Fortnite players. The main reason is that, with V-Bucks, it is possible to readily access most of the items in the Fortnite game.

Part of Fortnite's rise to dominance has no doubt been its cross-platform availability, with casual mobile gamers on the move able to play with hardcore bedroom gamers on an equal footing (Sony was hesitant, but cross-play functionality has recently been enabled for PS4 players). Most of the FORTNITE V BUCKS GENERATOR websites out there try to convince people that their developers somehow managed to hack into the Fortnite database, and that they can therefore easily obtain unlimited free V-Bucks in the Battle Royale game.

Figure 1: Data shows the estimated revenue of Fortnite compared to PlayerUnknown's Battlegrounds between August 2017 and June 2018, based on the Edison Trends dataset. While you can't directly gift V-Bucks to another person, you have a few options to help them get their Fortnite fix: buy them a gift card for the platform of their choice, or buy a bundle with limited content.

VBUCKS - Relax, It's Play Time

Once the recording starts, you'll see a preview in the upper-right corner of your game (which you can minimise, if you want). This preview window lets you quickly toggle the microphone and webcam on and off, and you can also click on the "chat" link to see exactly what everyone is saying about your terrible Fortnite death streak. If you're wondering what items are in the Fortnite shop today, on the day that you're reading this, you can head over to our Fortnite Battle Royale shop items guide. We'll be updating that page every day, to feature all the brand new items that Epic brings to the Fortnite store.

If you want help with getting Fortnite purchases on Xbox Live, PlayStation or the Microsoft marketplace, then see the blog. If you are struggling to download the Fortnite game, then write to us right now to get support on the generator. V-Bucks are the most popular in-game purchase for Fortnite players, making up 83% of items bought and 88% of spending. While different V-Bucks amounts are available for purchase, the single most popular Fortnite item is 1,000 V-Bucks for $9.99, which accounts for 53% of items purchased and 33% of spending.

Thanks for watching the Fortnite: Battle Royale & Fortnite: Save The World videos! Want more? I post daily Fortnite videos and anything interesting for Fortnite Battle Royale. Fortnite's original and much less popular horde mode offers daily login bonuses, daily challenges, and rewards for Storm Shield Defense missions. They are simple and easy ways to get a small amount of currency each day, although you'll need to actually buy the mode.

Being the best at Fortnite Battle Royale is no easy feat, and there's no surefire way to achieve a Victory Royale every time. Still, while we can't guarantee you'll finish in the top five whenever you play, this guide to playing Fortnite Battle Royale should help you outlive your peers more often than not. It is important to learn where chests are located in Fortnite - Battle Royale. That gives you an immense advantage. You can memorize every individual spot, but the easier approach is to look them up.

All the Fortnite Battle Royale tips you need, plus Fortnite Android info, free V-Bucks, and Fortnite server status updates. Fortnite is the best battle royale video game of 2018. PUBG is a similar game, although its quality is much lower than Fortnite's. The key question is whether the dances used in Fortnite emotes are copyrightable material protected under US law. If not, then Epic Games' use of the dances is not copyright infringement, and in-game purchases of the particular dances can continue unfettered.

The Secrets To VBUCKS